Topics 2020.02.10

Atmospheric measurement techniques by deep learning

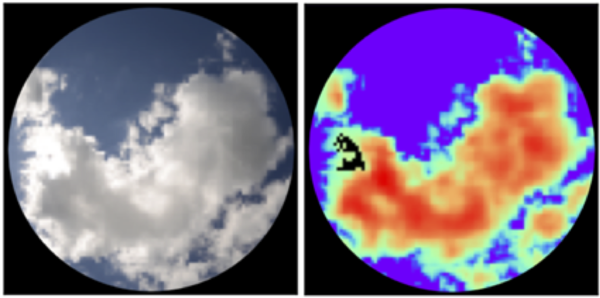

Figure 1. Estimation of cloud optical thickness (right) from camera image (left) using deep learning (Masuda et al., 2019).

A method for estimating the high-resolution spatial distribution of clouds from sky camera images using deep learning has been published in the journal Remote Sensing (Masuda et al., 2019).

In our group, the use of deep learning for atmospheric remote sensing began in 2016. Around May 2016, "AlphaGo" defeated a professional Go player and became a hot topic in the media. I saw in a newspaper that the technology used was "deep reinforcement learning". At that time, Rintaro Okamura, a second-year student in the master's program, said, "The deep learning framework used in AlphaGo has been publicly released and may be usable for my own research". He was then developing a satellite remote sensing method for three-dimensional clouds based on a three-dimensional atmospheric radiative transfer model. When I heard that, I thought, "Deep? Learning? ... What is that?" I went back to my desk and did a web search. I learned that deep learning is a family of techniques for training multilayer (deep) neural networks, and that it is the core technology of the third artificial intelligence boom, which is currently flourishing. Several papers on remote sensing of three-dimensional clouds using neural networks had been published from 2002 to 2007. In those studies, neural networks with a depth of about three layers were used, and both the scale of the problems that could be handled and the amount of data were much smaller than now. I thought that we could do something more advanced because we could now use a multilayer convolutional neural network, and said, "The idea we've been talking about may be good. Let's do it." We purchased a GPU computer, and Mr. Okamura, who has excellent skills and a talent for adopting new technologies, completed his research in about half a year. It was published in the academic journal Atmospheric Measurement Techniques in September 2017, after he had completed his master's course (Okamura et al., 2017).
A three-dimensional atmospheric radiative transfer model can simulate images similar to those observed from satellites, but estimating the properties of three-dimensional clouds from satellite observation data with such a model takes too much computation time. A practical method was needed but not available. Mr. Okamura's work was a breakthrough that used deep learning to fill this gap.

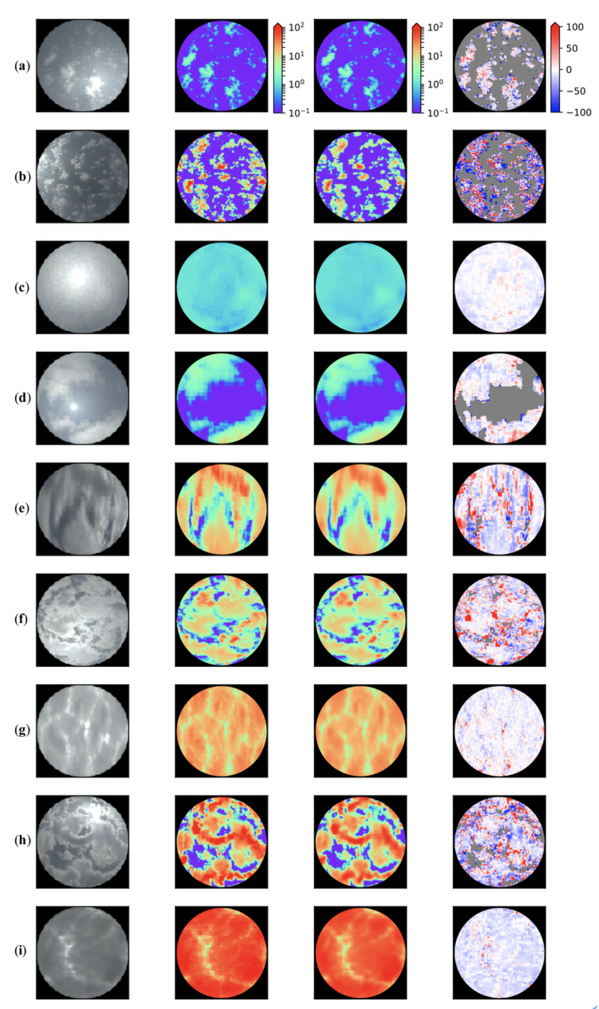

Ryosuke Masuda's research built on Mr. Okamura's work, with the objective of observing clouds from the ground. Cloud observation is useful for data assimilation in weather forecast models and for the prediction and diagnosis of solar power generation. The research target was the estimation of the spatial distribution of clouds from ground-based cameras, and a large number of synthetic camera images were created using a supercomputer and physics-based models. The approach of training a deep learning model on the output of physics models is the same as Mr. Okamura's. Deep learning technology has made remarkable progress since 2016. Inventions such as the residual network and batch normalization have made neural networks deeper and more accurate, helped by the development of problem-specific architectures. Using the latest techniques, Mr. Masuda succeeded in obtaining the spatial distribution of cloud optical thickness over 128x128 pixels from camera image data with a 28-layer convolutional neural network (CNN). Performance was evaluated using synthetic data obtained by model calculation, and it was shown that the effects of complicated three-dimensional radiative transfer, which depend on various conditions, were accurately represented. As an initial test, cloud optical thickness was estimated from actual digital camera images, and the results compared well with pyranometer observations. This study also provided some insight into why a CNN works well for estimating cloud distribution from observed images. The CNN estimates the cloud distribution using the spectral characteristics contained in the multi-channel camera image together with the spatial features and spatial context of the clouds. As a result, even if the observed data have some bias, for example, the use of spatial features still enables cloud characteristics to be estimated from the image data, and the estimate is less susceptible to noise and calibration errors in the observation data.
This robustness of the CNN is very important when it is applied to real observations. This research has made it possible to measure clouds quantitatively using inexpensive cameras. The paper was published in an academic journal in August 2019 and was selected for the journal's cover (Masuda et al., 2019). As of January 2020, there have already been 560 full text views and 906 abstract views.
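
To make the idea of an image-to-image CNN more concrete, the following is a minimal NumPy sketch of a small residual network that maps a three-channel 128x128 image to a single-channel 128x128 map of non-negative values. It is only an illustration under stated assumptions: the function names, channel count, number of blocks, random weights, and the softplus output are all hypothetical, and it is not the 28-layer architecture of Masuda et al. (2019). No training is performed.

```python
import numpy as np

def conv3x3(x, w):
    """Same-padded 3x3 convolution. x: (C_in, H, W), w: (C_out, C_in, 3, 3)."""
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))  # zero-pad the two spatial axes
    out = np.zeros((w.shape[0], h, wd))
    for dy in range(3):
        for dx in range(3):
            patch = xp[:, dy:dy + h, dx:dx + wd]  # shifted view, (C_in, H, W)
            out += np.einsum("oc,chw->ohw", w[:, :, dy, dx], patch)
    return out

def channel_norm(x, eps=1e-5):
    """Per-channel normalization of one image (a stand-in for batch normalization)."""
    mean = x.mean(axis=(1, 2), keepdims=True)
    var = x.var(axis=(1, 2), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def residual_block(x, w1, w2):
    """Two conv layers plus a skip connection that adds the input back."""
    y = np.maximum(channel_norm(conv3x3(x, w1)), 0.0)  # conv -> norm -> ReLU
    y = channel_norm(conv3x3(y, w2))                   # conv -> norm
    return np.maximum(x + y, 0.0)                      # skip connection, then ReLU

def estimate_map(image, params):
    """Map a (3, H, W) image to an (H, W) map of non-negative values."""
    x = np.maximum(conv3x3(image, params["stem"]), 0.0)
    for w1, w2 in params["blocks"]:
        x = residual_block(x, w1, w2)
    out = np.einsum("c,chw->hw", params["head"], x)    # 1x1 convolution head
    return np.logaddexp(0.0, out)                      # softplus keeps output >= 0

def init_params(rng, channels=8, n_blocks=2, scale=0.1):
    """Random (untrained) weights; sizes are arbitrary choices for this sketch."""
    return {
        "stem": rng.normal(0.0, scale, (channels, 3, 3, 3)),
        "blocks": [
            (rng.normal(0.0, scale, (channels, channels, 3, 3)),
             rng.normal(0.0, scale, (channels, channels, 3, 3)))
            for _ in range(n_blocks)
        ],
        "head": rng.normal(0.0, scale, channels),
    }

rng = np.random.default_rng(0)
params = init_params(rng)
image = rng.random((3, 128, 128))   # placeholder for an RGB sky camera image
tau = estimate_map(image, params)
print(tau.shape)  # (128, 128)
```

Because every output pixel is computed from a neighborhood of input pixels that grows with depth, a network of this kind can use spatial context in addition to the color of each pixel, which is the property discussed above.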

Driven by the successful industrial use of artificial intelligence, deep learning technology has developed remarkably and is now being used in various fields of scientific research. Machine learning methods such as deep learning enable highly accurate approximation of complex physics models and are useful tools for solving difficult problems. In general, they can be used for estimation, prediction, detection, identification, and classification problems. One of the main applications in the atmospheric, ocean, and land sciences is remote sensing. There are two approaches: training using only observation data, and training using data obtained by simulating observations with a physics model. Other major applications include statistical weather and climate forecasting, super-resolution (downscaling) of low-resolution simulations, approximation of physical models that are highly accurate but computationally expensive, and parameterization of physical processes that cannot be represented by low-resolution models. This research is being actively pursued by researchers in Japan and abroad. Judging from trends at academic conferences and meetings, a key question that researchers are currently focusing on is how to incorporate physical constraints such as energy conservation into machine learning. Many of these challenges are likely shared with research fields other than geophysics. Breakthroughs are expected to continue in the coming years.
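
One common way to bring a physical constraint into machine learning is to add a penalty term to the training loss. The sketch below illustrates this for a toy energy budget in which the reflected, transmitted, and absorbed fluxes should sum to the incoming flux. It is a hypothetical example: the function `constrained_loss`, the three-way flux partition, the `weight` parameter, and all numbers are assumptions made for illustration, not a method taken from the studies described here.

```python
import numpy as np

def constrained_loss(pred, target, incoming, weight=1.0):
    """Mean squared error plus a soft energy-conservation penalty.

    pred, target: (N, 3) arrays of reflected, transmitted, absorbed flux.
    incoming:     (N,) array of incoming flux for each sample.
    weight:       relative strength of the physics penalty (hypothetical).
    """
    data_term = np.mean((pred - target) ** 2)
    residual = pred.sum(axis=1) - incoming   # zero when energy is conserved
    physics_term = np.mean(residual ** 2)
    return data_term + weight * physics_term

# Toy data: two samples with unit incoming flux.
incoming = np.array([1.0, 1.0])
target = np.array([[0.3, 0.6, 0.1],
                   [0.5, 0.4, 0.1]])

# A prediction that is slightly wrong but still conserves energy ...
conserving = np.array([[0.35, 0.55, 0.1],
                       [0.45, 0.45, 0.1]])
# ... and one that adds 0.05 to every component, breaking the budget.
violating = conserving + 0.05

loss_conserving = constrained_loss(conserving, target, incoming)
loss_violating = constrained_loss(violating, target, incoming)
print(loss_conserving < loss_violating)  # True
```

A soft penalty like this only discourages violations. Enforcing the constraint exactly would require a different design, for example building the conservation law into the structure of the model output.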

Hironobu Iwabuchi, Associate Professor (Center for Atmospheric and Oceanic Studies, Radiation and Climate Physics Group)

Publication

Okamura, R., H. Iwabuchi, and K. S. Schmidt (2017): Feasibility study of multipixel retrieval of optical thickness and droplet effective radius of inhomogeneous clouds using deep learning. Atmos. Meas. Tech., 10, 4747-4759; doi:10.5194/amt-2017-154

https://www.atmos-meas-tech-discuss.net/amt-2017-154/

Masuda, R., H. Iwabuchi, K. S. Schmidt, A. Damiani, and R. Kudo (2019): Retrieval of cloud optical thickness from sky-view camera images using a deep convolutional neural network based on three-dimensional radiative transfer. Remote Sens., 11, 1962; doi:10.3390/rs11171962

https://www.mdpi.com/2072-4292/11/17/1962

Figure 2. An example of estimating the optical thickness of cloud using a convolutional neural network. (Left) Synthetic camera image created by simulation; (Middle left) Ground truth value of cloud optical thickness; (Middle right) Estimated cloud optical thickness; (Right) Cloud optical thickness estimation error. (from Masuda et al., 2019, Creative Commons Attribution License)